“A good ad creative”: what exactly does that look like? There is no single answer; it differs from case to case. After all, “good” is a highly subjective notion. How can you make sure your understanding of “good” matches that of your potential audience? One way is ad creative testing. In this article, experts from the performance marketing agency AdQuantum show you how to prepare for this testing (not to be confused with A/B testing), how to conduct it efficiently, and how to use the results correctly.

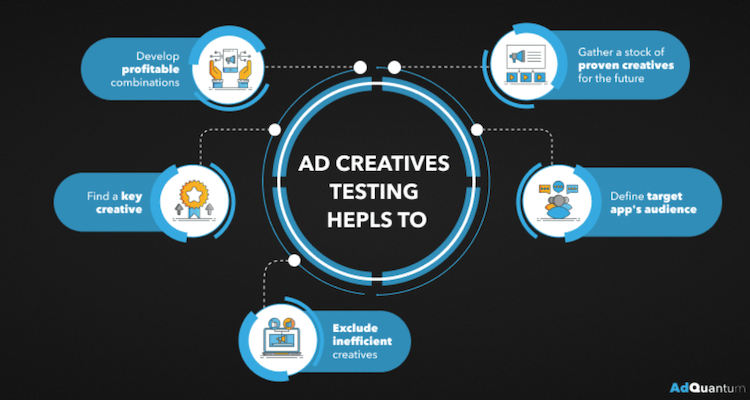

Why tests are conducted

Mobile marketing teams often neglect testing because it requires extra time and investment. Of course, it is much easier and faster to start running ads right away without any extra steps. But it is much better not to skip testing: in the long run, tests save you nerves, money, and time. Testing helps you:

- Eliminate 80% of ineffective creatives, saving human and financial resources;

- Identify the best ad creatives among all the successful ones and make the most of them;

- Find the combinations that bring profit;

- Understand what kind of audience you are dealing with: what they are interested in and which topics could hook them;

- Avoid a situation where a creative has “burnt out” and there is nothing to replace it with. The more creatives you have in stock, the more ad volume you can sustain.

What to test and how to conduct the testing

It is not always possible to determine what exactly catches a user’s attention in a particular ad, so you can test literally anything in a creative. Besides the creative concept itself, many details can be put to the test: composition, contrast, the number of key elements, the text on the creative, colors, the look of the call-to-action (CTA) button, video timing, and more.

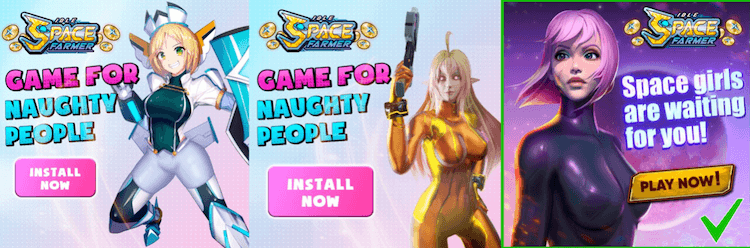

For example, in the creatives for the mobile game Idle Space Farmer, we tested the rendering style; the color, text, and shape of the CTA button; additional text on the creative; the girl’s appearance and location; and the background. The collage shows the initial, intermediate, and final versions of a creative built from the same hypothesis. Testing is what helped us determine what engages the audience best.

On average, testing lasts 1-2 days. That is enough time to get the first metrics showing you how to proceed. The number of testing iterations depends on how many creatives are tested simultaneously.

In terms of money, testing usually takes up to 15% of the total ad budget. So even if the tests show poor results, overall campaign performance does not suffer. And the testing budget is never wasted: for the money spent, you always gain valuable experience. After the very first test iteration, you already understand which ads actually interest your target audience and which don’t.
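The budget arithmetic above can be sketched in a few lines of Python as a rough planning aid. Only the 15% cap comes from the text; the total budget, the spend each creative needs to produce usable metrics, the number of creatives run in parallel, and the 2-day iteration length are hypothetical inputs you would replace with your own figures.

```python
import math


def plan_tests(
    total_budget: float,
    n_creatives: int,
    per_iteration: int,
    spend_per_creative: float,
    days_per_iteration: int = 2,
    max_test_share: float = 0.15,
) -> dict:
    """Rough testing plan: budget cap, required spend, iterations, and duration.

    All inputs are hypothetical planning figures, not benchmarks.
    """
    test_budget = total_budget * max_test_share          # cap: up to 15% of the total budget
    required_spend = n_creatives * spend_per_creative    # spend needed to test every creative
    iterations = math.ceil(n_creatives / per_iteration)  # more creatives in parallel -> fewer iterations
    return {
        "test_budget": test_budget,
        "required_spend": required_spend,
        "iterations": iterations,
        "days": iterations * days_per_iteration,
        "fits_budget": required_spend <= test_budget,
    }


plan = plan_tests(total_budget=50_000, n_creatives=20, per_iteration=5, spend_per_creative=300)
print(plan)
```

Raising `per_iteration` shortens the schedule, which reflects the point above: the more creatives you test simultaneously, the fewer iterations you need, as long as the required spend still fits under the cap.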

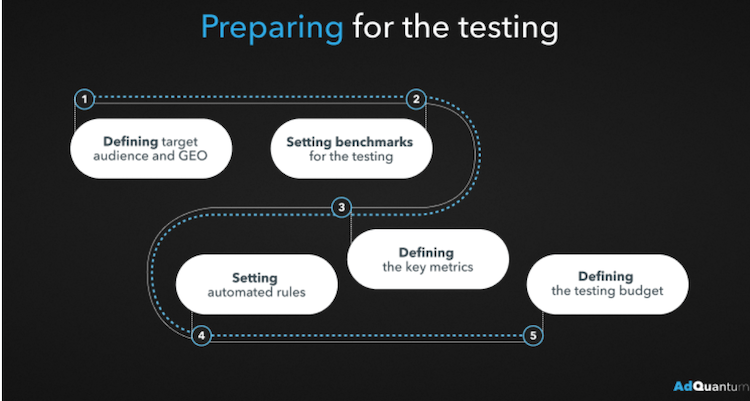

How to prepare for the testing

To avoid flying blind and to get the most out of creative tests, you need to prepare for them properly. Stick to these points to get objective results and design the most efficient ad creatives:

- Select the audience and GEO you will test your creatives on. Choose the ones you plan to keep working with after the testing.

- Set the testing criteria (benchmarks). Also, use the same audience and optimization settings for all creatives.

- Define the key metric that will indicate whether a creative passed the test. It’s best to focus on click-through rate (CTR), install rate (IR), conversion rate (CR), or retention rate (RR). If the project has already run user acquisition, set benchmarks based on its own data. If the project is completely new and has no data yet, rely on internal benchmarks from other apps in similar verticals.

- Set internal auto-rules that exclude a creative from rotation for a long period if the metric you are focused on falls out of range. You mostly need this step when the advertising platform does not support auto-rules.

- Determine the testing budget. The budget and the traffic volume must be large enough to produce at least a minimally statistically significant result.
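A minimal sketch of how these preparation steps might fit together for a team running its own auto-rules. The `CreativeStats` record, the 20% tolerance, and the rule outcomes are hypothetical; CR is assumed here to mean installs per click, although platforms define it differently. `min_sample_size` uses the standard normal-approximation formula n = z^2 * p * (1 - p) / e^2 to estimate how many impressions are needed before a CTR reading can be trusted.

```python
import math
from dataclasses import dataclass
from statistics import NormalDist


@dataclass
class CreativeStats:
    """Hypothetical per-creative counters pulled from an ad platform report."""
    name: str
    impressions: int
    clicks: int
    installs: int

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def cr(self) -> float:
        # Assumption: CR = installs per click; some platforms use other denominators.
        return self.installs / self.clicks if self.clicks else 0.0


def min_sample_size(p: float, margin: float, confidence: float = 0.95) -> int:
    """Impressions needed to estimate a rate p within +/- margin (normal approximation)."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil(z * z * p * (1 - p) / margin ** 2)


def auto_rule(stats: CreativeStats, ctr_benchmark: float,
              tolerance: float, min_impressions: int) -> str:
    """Hypothetical in-house auto-rule keyed on CTR."""
    if stats.impressions < min_impressions:
        return "keep_testing"   # too little traffic to judge yet
    if stats.ctr < ctr_benchmark * (1 - tolerance):
        return "exclude"        # out of range: remove from rotation
    return "pass"


n = min_sample_size(p=0.01, margin=0.002)  # ~1% benchmark CTR, +/-0.2 percentage points
print(n, auto_rule(CreativeStats("creative_a", 12_000, 60, 9), 0.01, 0.2, n))
```

Gating the rule on `min_impressions` keeps it from excluding a creative on noise alone, which ties the auto-rule back to the budget requirement: the budget has to buy at least that much traffic per creative.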

The proper order for testing creatives

There are three ways to order creative testing: simultaneously, sequentially, and via A/B testing.

Testing all creatives at once saves precious time. But when you have a large number of creatives ready to launch, it is better to test them sequentially.

How effective it is to run a large number of creatives simultaneously is debatable. Say you are running a Facebook ad campaign. Out of all the creatives, Facebook’s algorithms will pick only a few top ones to keep running. These winners will consume all the available traffic, so there cannot be many creatives competing in the auction at the same time. One way or another, it is always a rotation: the old ones die out and give way to new, fresh approaches.

That said, running a large number of ads in one campaign (up to 50) sometimes works even better than running 5-7 creatives. But you cannot predict when it will. Either way, you should test. There are no clear patterns here, although this mostly applies to gaming projects.

Automatic or manual testing?

Let’s say Facebook is your main traffic source. If you upload several ad creatives to one ad set, the social network will direct most of the traffic to those that, according to its algorithms, will bring the most conversions. This is convenient and simplifies the work of UA managers, but with this test format you risk missing potentially good creatives simply because they didn’t get enough traffic. A creative can lose out on traffic just because the same ad set contained a creative that Facebook’s internal algorithms considered “stronger.”

That is why it is important to test manually, directing traffic to each creative separately to estimate key metrics. Only then, after collecting several successful hypotheses, should you combine them in full-scale ad campaigns.

At the stage of testing and searching for a working hypothesis, we run campaigns only manually. Once effective creatives yield additional insights, we bring in dynamic creatives, a built-in Facebook feature. This tool automatically assembles new setups: image/video + text + headline + CTA. It then optimizes the ads on its own and shows only the best-performing ones. As a result, you can see statistics on which element of which creative worked better than the others.
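To illustrate why dynamic creatives quickly produce many setups, and how per-element statistics can be read from them, here is a small sketch. It does not call any Meta API (Facebook assembles and optimizes the combinations itself); the asset names and result numbers are hypothetical, and installs per impression is used only as an example metric.

```python
from collections import defaultdict
from itertools import product

SLOTS = ("media", "text", "headline", "cta")


def build_setups(assets: dict[str, list[str]]) -> list[dict[str, str]]:
    """Every combination of one asset per slot (image/video + text + headline + CTA)."""
    return [dict(zip(SLOTS, combo)) for combo in product(*(assets[s] for s in SLOTS))]


def breakdown(results: list[tuple[dict[str, str], int, int]], slot: str) -> dict[str, float]:
    """Installs per impression for each asset in one slot, summed over all setups using it."""
    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0])
    for setup, impressions, installs in results:
        bucket = totals[setup[slot]]
        bucket[0] += impressions
        bucket[1] += installs
    return {asset: inst / imp for asset, (imp, inst) in totals.items() if imp}


assets = {
    "media": ["video_a", "image_b"],
    "text": ["t1", "t2"],
    "headline": ["h1"],
    "cta": ["Install Now", "Play Now"],
}
setups = build_setups(assets)  # 2 * 2 * 1 * 2 = 8 setups
# Hypothetical results for the first four setups: (setup, impressions, installs).
results = [(setups[0], 1000, 10), (setups[1], 1000, 20),
           (setups[2], 1000, 12), (setups[3], 1000, 18)]
print(len(setups), breakdown(results, "cta"))
```

With more assets per slot the count grows multiplicatively, which is consistent with bringing in dynamic creatives only after manual tests have narrowed down the candidates.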

Throughout the ad campaign, the two approaches are combined. Sometimes you cannot do without manual testing; otherwise, you will not understand what actually performs. But sometimes automation is better, since there is simply no point in spending the buyer’s time.

How to evaluate test results

We analyze the test results in the same way as we set benchmarks:

- Compare the results of the test creatives with the project’s average metric values;

- Evaluate the statistical significance of the data: don’t make decisions based on $10 of spend or 2 conversions;

- If a creative meets the benchmarks, run a second test to rule out chance;

- Finally, launch the proven creative and start driving high volumes of traffic with it.
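The evaluation steps above can be sketched as a one-sided, one-sample proportion z-test against the project average, applied to a first run and then to a confirmation run. This is one reasonable choice of test, not necessarily AdQuantum’s method; the 30-conversion minimum and the 5% significance level are hypothetical thresholds standing in for “not $10 or 2 conversions,” and the normal approximation assumes reasonably large samples.

```python
import math
from statistics import NormalDist


def beats_benchmark(successes: int, trials: int, benchmark: float,
                    alpha: float = 0.05, min_successes: int = 30):
    """One-sided z-test: is the creative's rate significantly above the benchmark?

    Returns None when there is too little data to decide (hypothetical threshold).
    """
    if not 0 < benchmark < 1:
        raise ValueError("benchmark must be a rate strictly between 0 and 1")
    if trials <= 0 or successes < min_successes:
        return None
    p_hat = successes / trials
    se = math.sqrt(benchmark * (1 - benchmark) / trials)  # std. error under the benchmark rate
    z = (p_hat - benchmark) / se
    p_value = 1 - NormalDist().cdf(z)
    return p_value < alpha


def decide(first, second=None, benchmark=0.01):
    """Test the first run and, if it passes, require a confirmation run to pass too."""
    result = beats_benchmark(*first, benchmark)
    if result is None:
        return "need more data"
    if not result:
        return "reject"
    if second is None:
        return "run second test"
    confirm = beats_benchmark(*second, benchmark)
    if confirm is None:
        return "need more data"
    return "scale" if confirm else "reject"


# Hypothetical CTR results as (clicks, impressions) for two runs of one creative.
print(decide((150, 10_000), (140, 10_000), benchmark=0.01))
```

Requiring two independent passes before scaling mirrors the “second test” step: a creative that clears the benchmark once by luck is unlikely to do so again.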

How has testing changed since iOS 14.5+ launched?

It’s too early to draw objective conclusions: not all users have switched to iOS 14.5+ yet. But the performance of iOS UA campaigns has changed significantly: all data is now available only at the campaign level, not at the creative level.

If the ad groups within a campaign target different audiences, we cannot objectively assess the effectiveness of a particular ad, because we receive events for the campaign as a whole.

But there is a solution: test creatives on Android and transfer successful ones to iOS.

Better safe than sorry — and we completely agree with that. Do you still have questions? Let’s talk.