“Correlation doesn’t imply causation.” That’s a lesson most marketers have had to learn the hard way.

In a complex digital ecosystem, a prospect is exposed to multiple channels before converting. So it makes perfect sense for app marketers to ask: “Would these new users have installed our app even without seeing the ad?”

Incrementality testing, also called uplift measurement, helps marketers assess the real impact of their ad campaigns and channels. This post looks at why incrementality testing is needed and how to get started.

This post was first published on revx.io.

Why look beyond the current attribution models?

The digital space is highly interconnected, and it takes a range of touchpoints, channels, and formats to drive the desired action from the user.

How do attribution models account for the full picture? They don’t!

They fall short of answering a critical question for marketers: how are my campaigns adding value beyond what last-click and last-view attribution can measure?

That’s why marketers should strive for a measurement approach that captures the intricacies of brand-user interaction and paints the whole picture. Incrementality testing is one methodology that can help marketers justify their advertising spend.

What is incrementality testing?

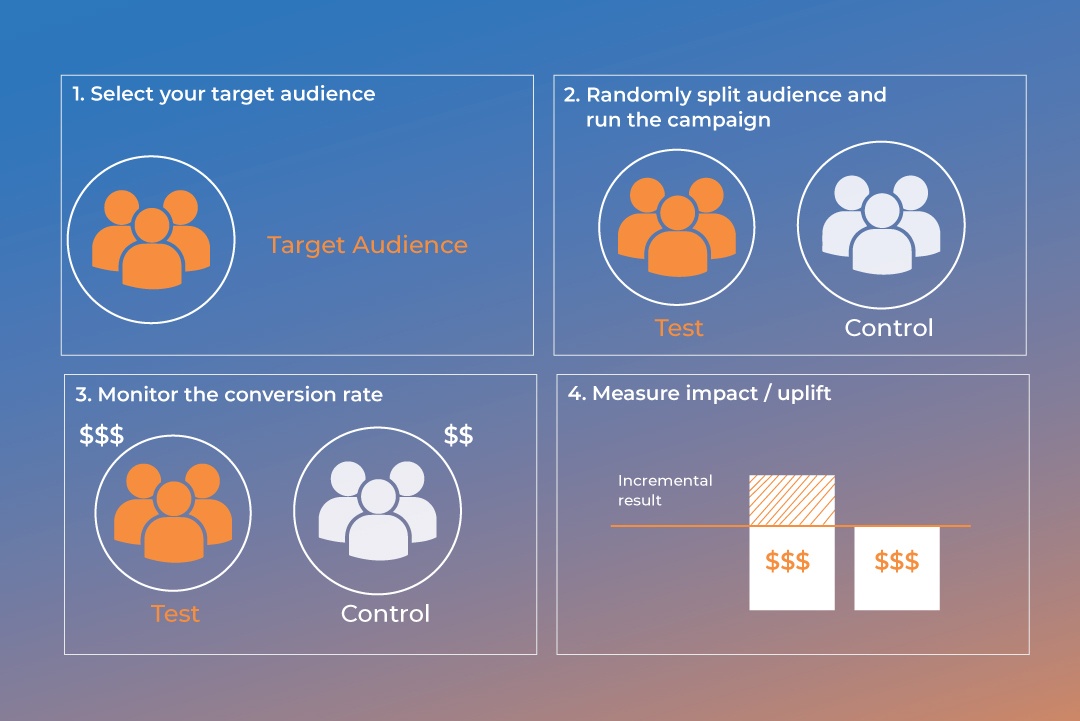

Incrementality testing is a data-driven methodology that measures the incremental results directly caused by your campaigns, using the principle of randomized controlled trials (RCTs).

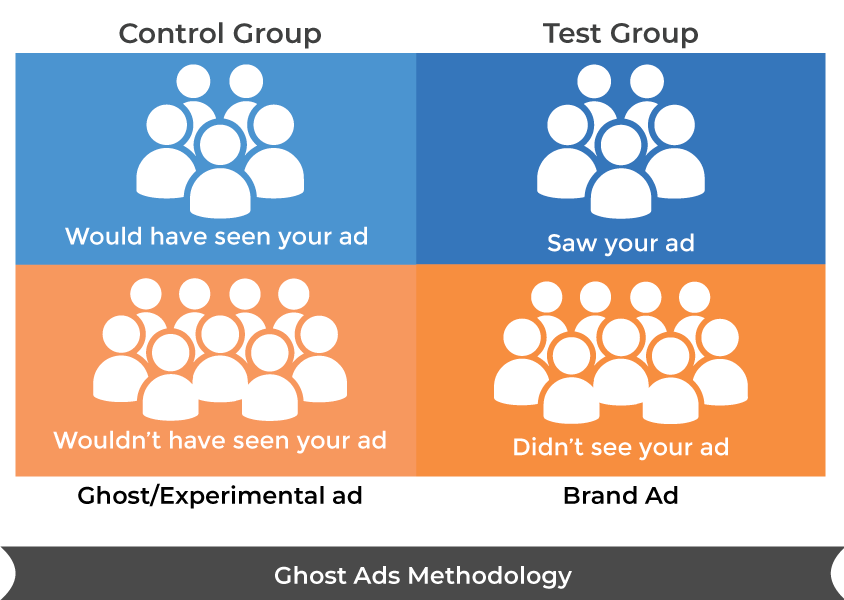

It compares the behavior of two randomly assigned audience groups: one that is exposed to the ads (the test group) and one that is not (the control group).

The difference in conversion rates between the two groups, known as the uplift, shows the true impact of your ad campaigns.

Incrementality testing (Source: RevX)
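To make the comparison concrete, here is a minimal Python sketch of the uplift calculation. `GroupResult` and `uplift` are illustrative names, not part of any RevX tool, and the figures in the usage example are hypothetical. Relative uplift is taken here as the absolute difference divided by the control group's conversion rate.

```python
from dataclasses import dataclass


@dataclass
class GroupResult:
    users: int        # users assigned to the group
    conversions: int  # users in the group who converted (e.g. installed the app)

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.users if self.users else 0.0


def uplift(test: GroupResult, control: GroupResult) -> dict:
    """Absolute and relative uplift of the test group over the control group."""
    cr_test = test.conversion_rate
    cr_control = control.conversion_rate
    absolute = cr_test - cr_control
    relative = absolute / cr_control if cr_control else float("inf")
    # Conversions in the test group that would not have happened without the ads
    incremental = absolute * test.users
    return {
        "cr_test": cr_test,
        "cr_control": cr_control,
        "absolute_uplift": absolute,
        "relative_uplift": relative,
        "incremental_conversions": incremental,
    }


if __name__ == "__main__":
    # Hypothetical numbers: 100,000 users per group
    print(uplift(GroupResult(100_000, 1_200), GroupResult(100_000, 1_000)))
```

With 1,200 conversions from 100,000 test users and 1,000 from 100,000 control users, the absolute uplift is 0.2 percentage points, a relative uplift of about 20%, or roughly 200 incremental conversions.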

Incrementality testing uncovers which campaign or channel investments are paying off. It ultimately lets you eliminate waste and shift budget toward the real heroes of your advertising mix.

Types of incrementality testing methods

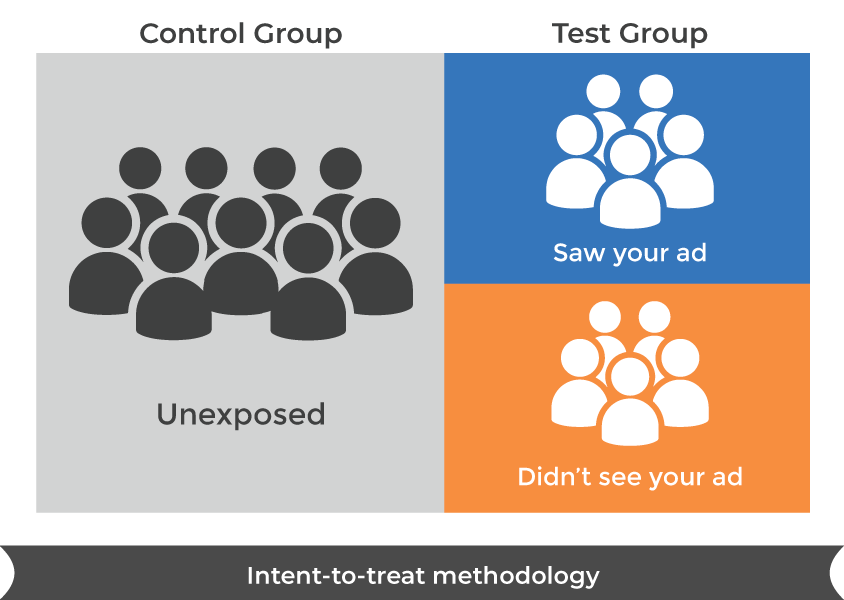

Intent-to-treat method

In this method, part of the target audience is randomly split into two groups, test and control: the test group is targeted with brand ads, while ads are withheld from the control group. The premise is simple: the conversions or revenue generated by the test group are compared with those generated by the control group. This gives a straightforward measurement of the uplift from your ad campaigns.
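One common way to implement the random split (an assumption here; the post does not describe how RevX does it) is to hash each user ID together with an experiment name, so that a user always lands in the same group for the life of the test. The function names and the 10% control share below are hypothetical.

```python
import hashlib


def assign_group(user_id: str, experiment: str, control_share: float = 0.1) -> str:
    """Deterministically assign a user to 'test' or 'control'.

    Hashing (experiment, user_id) gives a stable, effectively random split:
    the same user always gets the same group within one experiment.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0xFFFFFFFF  # map the first 32 bits to [0, 1]
    return "control" if bucket < control_share else "test"


def should_bid(user_id: str, experiment: str, control_share: float = 0.1) -> bool:
    """Intent-to-treat: the bidder simply never bids on control users."""
    return assign_group(user_id, experiment, control_share) == "test"
```

Because control users are just skipped at bid time, the split itself adds no media cost.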

Winning factors:

- Easy to implement and widely used

- No additional cost to the advertiser

Keep in mind: in a programmatic environment, this method doesn’t guarantee that all test-group users are actually exposed to ads, since exposure depends on factors such as win rate and supply availability. Unexposed test-group users still count toward the test group’s results, which adds noise; a sufficiently high exposure rate keeps that noise in check.

Intent-to-treat methodology (Source: RevX)
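The exposure-rate caveat can be quantified. Under the usual assumptions that control users are never exposed and that unexposed test users convert at the same rate as control users, the intent-to-treat (ITT) uplift equals the uplift among exposed users multiplied by the exposure rate. This minimal sketch, with hypothetical function names, rescales it accordingly.

```python
def itt_uplift(cr_test: float, cr_control: float) -> float:
    """Intent-to-treat uplift: compares whole groups, exposed or not."""
    return cr_test - cr_control


def uplift_on_exposed(cr_test: float, cr_control: float, exposure_rate: float) -> float:
    """Rescale the ITT uplift to the test users who were actually exposed.

    Assumes control users are never exposed and unexposed test users convert
    like control users, so the ITT uplift is the exposed-user uplift diluted
    by the exposure rate.
    """
    if not 0 < exposure_rate <= 1:
        raise ValueError("exposure_rate must be in (0, 1]")
    return itt_uplift(cr_test, cr_control) / exposure_rate
```

For example, an ITT uplift of 0.1 percentage points at a 25% exposure rate corresponds to about 0.4 points among exposed users. The sampling noise in the ITT estimate is magnified by the same factor, which is why a low exposure rate makes results noisy.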

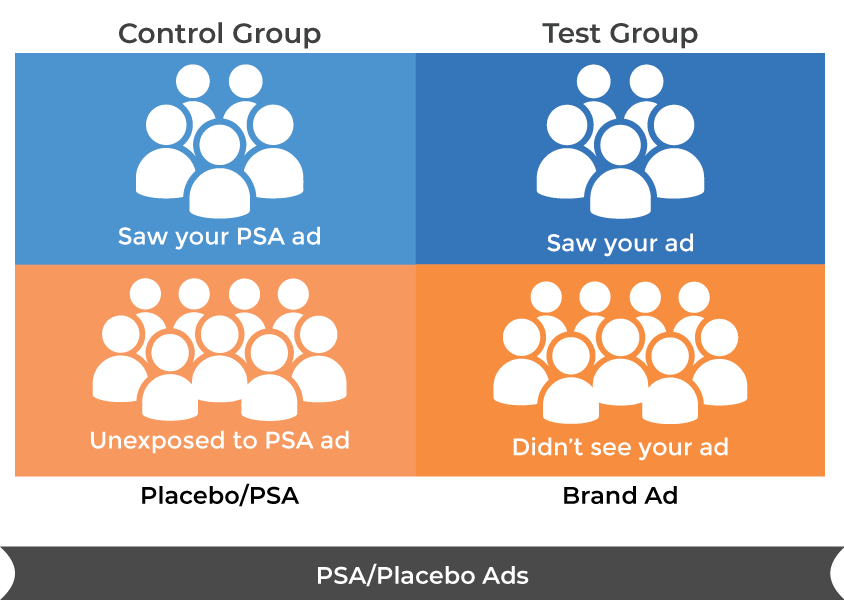

Placebo ads / PSA ads

In this method, the test group is shown the brand ad, while the control group is shown a public service announcement (PSA) or other social service ad. Because ads are actually served to both groups, you get clear visibility into which users were exposed. The uplift is then measured between test-group users who saw the brand ad and control-group users who saw the PSA ad.
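Because both groups are actually served an ad, the comparison can be restricted to users with a recorded impression. A minimal sketch, assuming per-user records with hypothetical fields `group`, `exposed`, and `converted`:

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class UserRecord:
    group: str       # "test" (saw the brand ad) or "control" (saw the PSA ad)
    exposed: bool    # True if an impression was actually served to the user
    converted: bool  # True if the user converted afterwards


def exposed_conversion_rate(records: Iterable[UserRecord], group: str) -> float:
    """Conversion rate among users in `group` who actually saw an ad."""
    exposed = [r for r in records if r.group == group and r.exposed]
    if not exposed:
        raise ValueError(f"no exposed users in group {group!r}")
    return sum(r.converted for r in exposed) / len(exposed)


def psa_uplift(records: list[UserRecord]) -> float:
    """Uplift among exposed users only: brand-ad viewers vs PSA-ad viewers."""
    return exposed_conversion_rate(records, "test") - exposed_conversion_rate(records, "control")
```

Unexposed users are dropped from both groups, which is where the reduced noise comes from; the comparison is only fair if both groups were targeted identically.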

Winning factors:

- Minimal noise and higher reliability

- Easy to implement

Keep in mind: this method incurs additional media costs for serving PSA ads to the control group, and targeting must be equivalent for both groups to avoid bias.

PSA/Placebo ads (Source: RevX)

Ghost ads

This method is considered a good combination of intent-to-treat and placebo ads, but it is still nascent in the ad tech world and lacks verifiable transparency. Here, the demand-side platform (DSP) splits the target audience into test and control groups only at the point of bidding.

In other words, ghost bids are used to mark and exclude control-group users, while actual winning bids deliver real brand ads to users in the test group. So although the control group never sees the brand ad, the advertiser has access to log-level data on when that ad could have been served.
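The ghost-ad flow can be sketched as a bid-time hook. This is a simplified illustration under stated assumptions: `on_auction_won` stands in for whatever point the DSP determines its bid would win, the 50/50 split is arbitrary, and none of these names belong to a real DSP API. For control users the "win" is only a prediction, which is exactly what makes ghost bid wins hard to verify.

```python
import hashlib
from dataclasses import dataclass, field


def in_control(user_id: str, control_share: float = 0.5) -> bool:
    """Stable hash-based split, evaluated only at bid time (hypothetical)."""
    h = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest()[:8], 16)
    return h / 0xFFFFFFFF < control_share


@dataclass
class GhostAdLog:
    served: set[str] = field(default_factory=set)  # test users who got a real impression
    ghost: set[str] = field(default_factory=set)   # control users who would have got one

    def on_auction_won(self, user_id: str) -> bool:
        """Called when the bid would win. Returns True if the brand ad is served."""
        if in_control(user_id):
            self.ghost.add(user_id)  # log the would-be impression, serve nothing
            return False
        self.served.add(user_id)     # deliver the actual brand ad
        return True

    def uplift(self, converters: set[str]) -> float:
        """Conversion rate of exposed test users minus that of ghost-exposed control users."""
        if not self.served or not self.ghost:
            raise ValueError("need users in both groups")
        cr_test = len(self.served & converters) / len(self.served)
        cr_ghost = len(self.ghost & converters) / len(self.ghost)
        return cr_test - cr_ghost
```

Both sides of the comparison are limited to users the campaign would actually have reached, so the control group costs nothing in media.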

Winning factors:

- No media cost for the control group

- Little noise, since the comparison is between users who were exposed and users who would have been exposed. The remaining noise comes from users whose would-be impression could not actually have been served.

Keep in mind: while this method is great in theory, ghost bid wins are hard to verify, since no platform (except self-regulated networks) has access to the entire auction process.

Ghost ads methodology (Source: RevX)