2019 marked a noticeable shift towards users demanding more voice-enabled apps.

This trend is largely due to the increased role artificial intelligence has started to play in users’ everyday lives thanks to voice assistants like Amazon’s Alexa. In fact, the demand for voice-enabled applications will only increase in the coming years, as many industry experts predict that nearly every application will integrate voice technology in some capacity within the next five years. Our recent study, the 2020 Enterprise Mobility Trends Report, found that artificial intelligence (AI) was one of the top five technology investments for enterprises in 2019. So where will AI and voice take us in 2020? This article takes an in-depth look at the trends that will define voice-enabled apps in the coming years and how they will affect developers in the mobile app development space.

5 Trends for Voice and AI in 2020

Streamlined Conversations

Both Google and Amazon announced in 2019 that their respective voice assistants will no longer require the use of repeated “wake” words. Previously, both assistants depended on a wake word (“Alexa” or “OK Google”) to initiate each new line of conversation. For example, one would have to ask “Alexa, what’s the current temperature at the hallway thermostat?” and then say “Alexa” again before asking the voice assistant to “set the hallway thermostat to 23 degrees.”

Consumers use voice assistants in specific locations, usually while multitasking, and may be alone or among a group of people when using them. Devices that can decipher these contextual factors make conversations more convenient and efficient, and they also show that the developers behind the technology are aiming to provide a more user-centric experience.

Compatibility and Integration

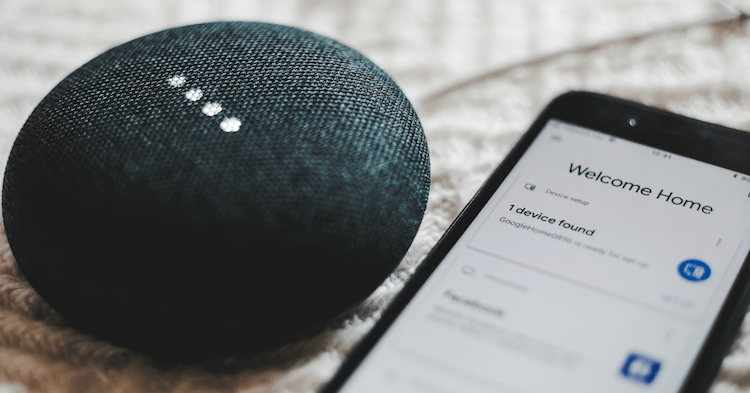

When it comes to integrating voice technology with other products, Amazon has been ahead of the game. Those who use Alexa will be familiar with the fact that the voice assistant is already integrated into a vast array of products including Samsung’s Family Hub refrigerators. Google has finally caught on and has announced Google Assistant Connect. The idea behind this technology is for manufacturers to create custom devices that serve specific functions and are integrated with the Assistant.

In 2020, we will see a greater interest in the development of voice-enabled devices. This will include an increase in mid-level devices: devices that have some assistant functionality but aren’t full-blown smart speakers. Instead, they communicate with your smart speaker, display, or perhaps even your phone over Bluetooth, and the processing happens on those devices.

Search Behaviors Will Change

Voice search has been a hot topic of discussion. Visibility will undoubtedly be a challenge, because voice assistants have no visual interface: users simply cannot see or touch a voice interface unless it is connected to the Alexa or Google Assistant app. Search behaviors, in turn, will see a big change. In fact, if tech research firm Juniper Research is correct, voice-based ad revenue could reach $19 billion by 2022, thanks in large part to the growth of voice search apps on mobile devices.

Individualized Experiences

Voice assistants will also continue to offer more individualized experiences as they get better at differentiating between voices. Google Home is able to support up to six user accounts and detect unique voices, which allows Google Home users to customize many features. Users can ask “What’s on my calendar today?” or say “Tell me about my day” and the assistant will read out commute times, weather, and news information for that individual user. It also supports per-user settings such as nicknames, work locations, payment information, and linked accounts such as Google Play, Spotify, and Netflix. Similarly, for those using Alexa, simply saying “learn my voice” will allow users to create separate voice profiles so the technology can detect who is speaking and tailor the experience accordingly.

Voice Push Notifications

Push notifications are a common way to increase user engagement and retention: they remind users of the app and display relevant messaging. Voice technology presents a unique means of distributing them. Now that both Google Assistant and Amazon’s Alexa allow the user to enable spoken notifications for any compatible third-party app, users can hear notifications rather than read them. These notifications are generally related to calendar appointments or new content from core features.

What Does This Mean for Developers?

Voice technology is becoming increasingly accessible to developers. For example, Amazon offers Transcribe, an automatic speech recognition (ASR) service that enables developers to add speech-to-text capability to their applications. Once the capability is integrated into an application, audio files can be submitted for analysis and a text file of the transcribed speech is returned.
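To make the Transcribe workflow concrete, here is a minimal Python sketch using the AWS SDK (boto3). It assumes AWS credentials are already configured and that the audio file has been uploaded to S3; the job name, bucket path, and helper function names are hypothetical and chosen only for illustration.

```python
import time


def build_transcription_request(job_name, media_uri,
                                media_format="wav", language_code="en-US"):
    """Build the keyword arguments for Transcribe's start_transcription_job call."""
    return {
        "TranscriptionJobName": job_name,       # must be unique per account/region
        "Media": {"MediaFileUri": media_uri},   # e.g. an s3:// URI (assumed here)
        "MediaFormat": media_format,
        "LanguageCode": language_code,
    }


def transcribe_audio(job_name, media_uri, poll_seconds=5, client=None):
    """Start a transcription job, poll until it finishes, return the transcript URI."""
    if client is None:
        # Imported lazily so the request builder can be used without boto3 installed.
        import boto3
        client = boto3.client("transcribe")

    client.start_transcription_job(**build_transcription_request(job_name, media_uri))

    while True:
        job = client.get_transcription_job(
            TranscriptionJobName=job_name
        )["TranscriptionJob"]
        status = job["TranscriptionJobStatus"]
        if status == "COMPLETED":
            # The transcript itself is a JSON file stored at this URI.
            return job["Transcript"]["TranscriptFileUri"]
        if status == "FAILED":
            raise RuntimeError(job.get("FailureReason", "transcription failed"))
        time.sleep(poll_seconds)
```

A call might look like `transcribe_audio("demo-job", "s3://example-bucket/call.wav")`. Transcription is asynchronous, which is why the sketch polls for completion; a production app would more likely react to a completion event than block in a loop.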

Google has made moves to make the Assistant more ubiquitous by opening its software development kit through Actions, which allows developers to build voice into their own products that support artificial intelligence. Another of Google’s speech-recognition products is the AI-driven Cloud Speech-to-Text tool, which enables developers to convert audio to text through deep learning neural network algorithms.
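As a rough illustration of what converting audio to text with Cloud Speech-to-Text can look like, the following Python sketch uses the `google-cloud-speech` client library. It assumes the library is installed, Google Cloud credentials are configured, and the audio is short, 16 kHz, LINEAR16-encoded speech; the function names and default values are hypothetical.

```python
def join_transcripts(response):
    """Concatenate the top alternative of each result into one string."""
    return " ".join(
        result.alternatives[0].transcript.strip()
        for result in response.results
        if result.alternatives  # skip results with no recognized alternatives
    )


def transcribe_with_google(audio_bytes, language_code="en-US",
                           sample_rate_hertz=16000, client=None):
    """Send a short audio clip for synchronous recognition and return its text."""
    # Imported lazily so join_transcripts can be used without the library installed.
    from google.cloud import speech

    client = client or speech.SpeechClient()
    config = speech.RecognitionConfig(
        encoding=speech.RecognitionConfig.AudioEncoding.LINEAR16,  # assumed format
        sample_rate_hertz=sample_rate_hertz,
        language_code=language_code,
    )
    audio = speech.RecognitionAudio(content=audio_bytes)
    response = client.recognize(config=config, audio=audio)
    return join_transcripts(response)
```

Synchronous recognition like this is intended for short clips; longer recordings would typically go through the service's asynchronous or streaming options instead.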

Voice Is the Future of Brand Interaction and Customer Experience

Voice has now established itself as the ultimate mobile experience. In 2020, voice-enabled apps will not only accurately understand what we are saying, but also how we are saying it and the context in which the inquiry is made.

There are still a number of barriers, however, that need to be overcome before voice applications see mass adoption. Technological advances, particularly in AI, natural language processing (NLP), and machine learning, are making voice assistants more capable. But to build a robust speech recognition experience, the artificial intelligence behind it has to become better at handling challenges such as accents and background noise. As consumers become increasingly comfortable with, and reliant upon, using voice to talk to their phones, cars, smart home devices, and more, voice technology will become a primary interface to the digital world. With it, expertise in voice interface design and voice app development will be in greater demand.

To gain more insight into enterprise mobility trends, read Clearbridge Mobile’s recent study, the 2020 Enterprise Mobility Trends Report.