As of the second quarter of 2019, Statista shows that over 25% of global in-app ad impressions served programmatically were fraudulent. Add to the mix that, for a long time, click injection, also known as click hijacking, has been one of the most popular CPI ad fraud types out there.

Cue ad fraudsters who, just like sewer rats, are making the most of this reality while the world is in a state of turmoil. They take advantage of the fact that advertising budgets have been cut dramatically, which jeopardizes the amount allocated to anti-fraud protection.

Not even tech giants are off-limits. In August 2019, Facebook filed a lawsuit against two mobile app developers for click injection fraud, in which malware installed on users’ phones created fake clicks on Facebook ads, making it look as if users had actually clicked on them.

It’s a vicious cycle that goes on and on, draining marketing budgets along the way. In fact, for 2020, $16.1 billion is expected to be lost at the hands of fraudsters. Given the current situation, this number is expected to be much higher.

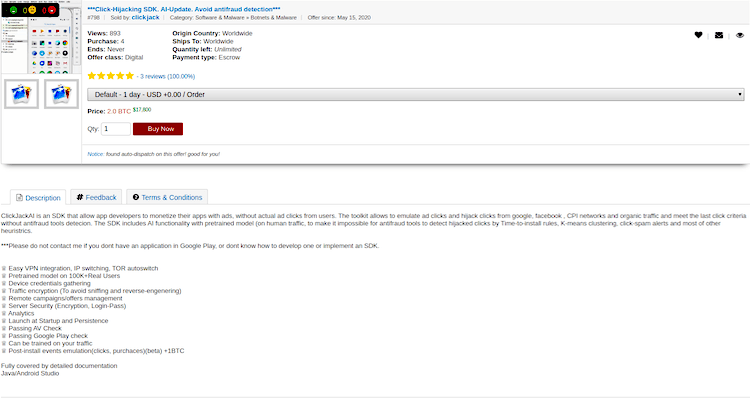

Recently, Scalarr’s Research Team found a malicious SDK for advanced, AI-powered click injection being sold on the Dark Web as of May 15, 2020.

The malicious SDK example

While it is not the first time criminals have used the Dark Web to sell CPI fraud tools, it is the first time advanced tools of this kind, powered by AI technology, have been offered to the public at a fairly low price.

According to the description in the marketplace, the SDK is for Android devices only and includes a pre-trained AI model for click hijacking. The model itself is trained on human-generated traffic.

The product listing boasts about the ability to monetize apps with ads without actual ad clicks from users. It also offers the ability to emulate ad clicks and hijack clicks from Google, Facebook, organic traffic, etc.

“Fraudsters are stepping into more advanced territory. They have now adopted technology that literally puts billions of advertisers’ budgets in high risk, as well as respected CPI networks and MMP providers,” said Inna Ushakova, Scalarr’s CEO and Co-founder. “AI advancements allow them to trick rule-based filters with ease, and keep their activity undetected for a long time.”

Additional features include easy VPN integration, IP switching, a TOR auto-switch, and traffic encryption that makes it harder to reverse-engineer anti-fraud criteria, to name a few.

The toolkit’s use of AI technology marks a worrisome step up in the level of sophistication expected from fraudsters. It can bypass time-to-install rules, K-means clustering, click spam alerts, and other heuristics widely used by simple anti-fraud tools.
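To make the idea of a time-to-install rule concrete, here is a minimal sketch of the kind of fixed-threshold heuristic a simple anti-fraud tool might apply. The thresholds, field names, and labels are assumptions made for illustration; they are not taken from Scalarr or from any specific vendor.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
MIN_CTIT_SECONDS = 10.0        # faster than this looks like click injection
MAX_CTIT_SECONDS = 24 * 3600   # slower than this looks like click spam


@dataclass
class Install:
    install_id: str
    click_ts: float    # Unix time of the attributed click
    install_ts: float  # Unix time of the first app open


def click_to_install_seconds(install: Install) -> float:
    """Time elapsed between the attributed click and the install."""
    return install.install_ts - install.click_ts


def classify(install: Install) -> str:
    """Apply a fixed-threshold time-to-install rule to one install."""
    ctit = click_to_install_seconds(install)
    if ctit < MIN_CTIT_SECONDS:
        return "suspected_click_injection"
    if ctit > MAX_CTIT_SECONDS:
        return "suspected_click_spam"
    return "passed"


installs = [
    Install("a", click_ts=1000.0, install_ts=1003.0),              # 3 seconds
    Install("b", click_ts=1000.0, install_ts=1045.0),              # 45 seconds
    Install("c", click_ts=1000.0, install_ts=1000.0 + 30 * 3600),  # 30 hours
]

results = {i.install_id: classify(i) for i in installs}
print(results)
```

A rule like this inspects a single feature against static cut-offs. Traffic whose timings fall inside the accepted window passes regardless of how it was generated, which is one plausible reason a model trained on human traffic could slip past such filters.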

Interestingly enough, the toolkit also allows fraudsters to further train the models on a different dataset, and it can all be managed remotely.

Scalarr researchers contacted the seller, posing as prospective buyers of the toolkit. The seller then shared more alarming details in private conversation.

He revealed that he had developed the algorithm to evade most anti-fraud prevention and detection tools for his own apps. He used AI since it’s the only way to learn from human traffic and mimic it naturally, not just algorithmically or randomly.

The seller sent test data from a working prototype so the research team could check it against anti-fraud solutions. With this information in hand, the Scalarr team managed to separate fraudulent from genuine traffic but was thoroughly surprised at how difficult the task was.

The team had to delve into refining the most advanced Deep Learning algorithms to avoid false-positive results and only show fraudulent installs.

“When you have access to organic user data and can see how they play, then you could train advanced ML algorithms to simulate activity just like in genuine traffic,” said Borys Pratsiuk, CTO at Scalarr. “Events distribution combined with user data manipulation allows bad actors to generate fraud patterns for impressions/clicks injections from malicious applications.”

This is a step up for fraudsters who, for now, have found a way to prevail over some anti-fraud solutions. This threatening practice puts the entire CPI traffic procurement industry in grave danger, especially at a time when advertisers are losing users in some app categories and operating budgets are being cut.

While it’s too soon to calculate the total impact and losses caused by the emergence of these AI-powered tools for bypassing anti-fraud solutions, it’s safe to say that the numbers are in the billions.